Plenoptic cameras, also known as light field cameras, are the cameras best suited to capturing light fields. How do robotic systems use light field data? What new capabilities does this type of camera offer? How are such cameras calibrated properly? In this article, we take a closer look at the concept with the help of DLR’s introduction to the camera.

Light field

A light field is a 4D dataset with high potential to improve the perception of future robots. Its superior precision and detail can provide key advantages for automated object recognition. The four dimensions arise as follows: the sensor contributes a 2D pixel matrix, and this sensor can be displaced in 3D space to record the light field, which would give five dimensions. However, moving the camera along the direction of a light ray makes no difference to what is recorded for that ray, so one dimension is redundant and can be dropped, leaving a 4D dataset.

Compared to conventional 2D images, a 4D light field thus has two additional dimensions resulting from spatial displacement and reflecting the depth of the scene encountered. Through them, it is possible to obtain additional information and infer different data products. Most often, the industrial application of the light field exploits 2D images focused on a certain distance or 3D depth images (point clouds).

FEATURES OF THE LIGHT FIELD USING THE PLENOPTIC FUNCTION

Conventional cameras recreate the vision of the human eye: the camera views a scene from a fixed position and focuses on a specific object. It therefore focuses a selection of light rays from the scene to form a single image.

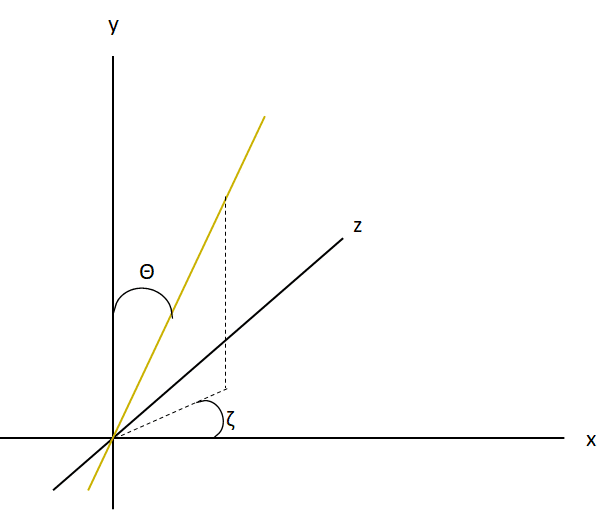

In contrast, a light field contains not only the light intensity at the position where a light ray hits the camera sensor, but also the direction from which the ray arrives. A 4D light field therefore stores the intensity, i.e. the amount of light measured by the sensor, for each position (u, v) and each pair of direction angles (Θ, ζ). Images are then rendered from this information.
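
As a minimal sketch of this idea, a sampled light field can be held in a 4D array. The axis order `[u, v, theta, zeta]`, the array sizes and the random values are assumptions chosen for illustration, not a data format defined by DLR. Summing over the two direction axes discards the directional information and leaves a plain 2D intensity image, which is roughly what a conventional sensor records.

```python
import numpy as np

# Hypothetical sampling: 40 x 30 positions (u, v), 5 x 5 directions per position.
rng = np.random.default_rng(0)
light_field = rng.random((40, 30, 5, 5))

def ray_intensity(lf, u, v, i_theta, i_zeta):
    """Intensity of one ray: position (u, v) plus two direction indices."""
    return lf[u, v, i_theta, i_zeta]

def conventional_image(lf):
    """Integrate over both direction axes; only a 2D image remains."""
    return lf.sum(axis=(2, 3))

image_2d = conventional_image(light_field)
print(light_field.ndim, image_2d.shape)
```

The two extra axes are exactly the information that the later data products (refocused images, depth images) are computed from.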

Thanks to the two additional dimensions, other data products are possible. The most common are 2D images, for example images focused at a certain distance or images with an extended depth of field. Another possibility is to create a 3D depth image from the 4D light field data. With a single image capture, it is now feasible to create multiple directly related image products.
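
One way to picture how a 2D image focused at a chosen distance can be derived from 4D data is shift-and-add refocusing, a technique from the light field literature that the article does not name explicitly. The sketch below assumes a light field stored as `lf[u, v, i, j]`, integer pixel shifts, and wrap-around at the image border (`np.roll`); the `slope` parameter and the synthetic test scene are hypothetical.

```python
import numpy as np

def refocus(lf, slope):
    """Shift-and-add refocusing of a light field stored as lf[u, v, i, j].

    (i, j) index the viewing direction. `slope` is the integer pixel shift
    per direction step and selects the plane that ends up in focus.
    """
    n_u, n_v, n_i, n_j = lf.shape
    ci, cj = n_i // 2, n_j // 2
    out = np.zeros((n_u, n_v))
    for i in range(n_i):
        for j in range(n_j):
            # Undo the parallax of this directional view, then accumulate.
            shift = (-slope * (i - ci), -slope * (j - cj))
            out += np.roll(lf[:, :, i, j], shift, axis=(0, 1))
    return out / (n_i * n_j)

# Synthetic scene: one flat texture, displaced by 2 px per direction step.
rng = np.random.default_rng(1)
texture = rng.random((32, 32))
lf = np.empty((32, 32, 3, 3))
for i in range(3):
    for j in range(3):
        lf[:, :, i, j] = np.roll(texture, (2 * (i - 1), 2 * (j - 1)), axis=(0, 1))

sharp = refocus(lf, slope=2)    # views line up: the texture plane is in focus
blurred = refocus(lf, slope=0)  # views do not line up: the texture is blurred
```

Scanning over several values of `slope` and keeping the sharpest result per pixel is one possible route to an extended depth of field image or a coarse depth estimate.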

To use the information in a light field productively, software or an algorithm is needed to evaluate the data. Because of their four dimensions, light field images are very large and require a lot of computing power. For this reason, the industrial use of plenoptic cameras is still in its infancy: only recently have computers become powerful enough to process this data in sufficiently short periods of time. Going from the image to the 3D point cloud currently takes about 50 ms.

But how must a camera be designed so that it can capture the light field in this way?

Plenoptic cameras

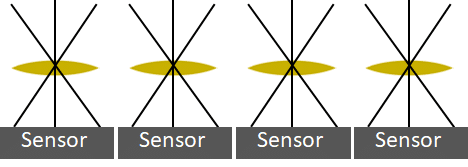

Plenoptic cameras are created by modifying conventional cameras. Thanks to their theoretically infinite depth of field and the ability to refocus, the plane of focus can be shifted in object space after the image has been captured. Because of this additional depth information, a plenoptic camera can also be used as a 3D camera.

To image the plenoptic function, there are basically two physical possibilities:

- Light field acquisition with micro lenses

- Light field acquisition with camera arrays

Light field acquisition with micro lenses

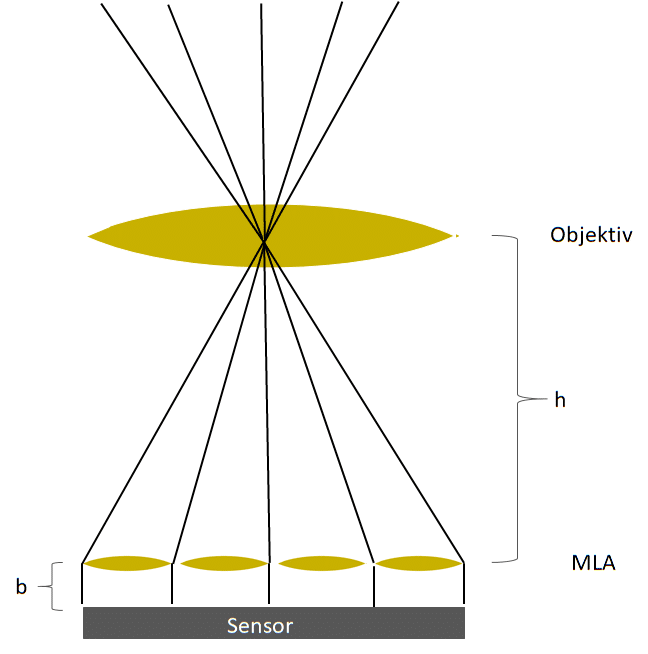

In a micro lens array (MLA), a plate with micro lenses sits in front of the camera sensor. A micro lens is a miniaturized control and focusing lens. It bundles the light optimally to prevent light rays from hitting the edge of the image sensor. In this way, it prevents distortions or differences in brightness from occurring. Depending on the type of light, the micro lens must be made of a different material:

- Wavelength between 150 nm and 4 μm: silicon dioxide

- Wavelength between 1.2 μm and 15 μm (infrared light): silicon

The MLA is a matrix of individual micro lenses, which allows it to capture the scene from different angles. Each of these lenses has a diameter of between a few micrometers and a few millimeters. The field of view covers several hundred pixels. Incident light rays are refracted in the micro lenses, so that, depending on its direction of incidence, each ray falls on a particular sensor pixel below the micro lens. The position (u, v) therefore corresponds to a micro lens, and the direction angles (Θ, ζ) correspond to a sensor pixel below this lens.
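
The mapping "micro lens gives the position, pixel below the lens gives the direction" can be sketched as a simple array rearrangement. This is an idealized illustration: it assumes a square micro lens grid that is perfectly aligned with the pixel grid and covers exactly `n` x `n` pixels per lens. Real MLAs (often hexagonal, rotated or offset) need a calibrated decoding step instead, and the function name is hypothetical.

```python
import numpy as np

def decode_lenslet_image(raw, n):
    """Rearrange a raw sensor image into a 4D array lf[u, v, i, j].

    raw : 2D array whose height and width are multiples of n
    n   : pixels per micro lens along each axis (assumed square, aligned grid)
    (u, v) is the micro lens index, (i, j) the pixel under that lens.
    """
    rows, cols = raw.shape
    if rows % n or cols % n:
        raise ValueError("sensor size must be a multiple of n")
    # Split each image axis into (lens index, pixel-under-lens index) ...
    blocks = raw.reshape(rows // n, n, cols // n, n)
    # ... and reorder so that position comes first and direction second.
    return blocks.transpose(0, 2, 1, 3)

raw = np.arange(6 * 8).reshape(6, 8)   # toy 6 x 8 sensor
lf = decode_lenslet_image(raw, 2)      # 3 x 4 lenses, 2 x 2 pixels each
print(lf.shape)
```

After decoding, `lf[:, :, i, j]` for a fixed `(i, j)` is the view of the scene from one direction.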

Due to their small size in the sub-millimeter range, light-field microlens arrays are used in particular in the life sciences – e.g. installed in microscopes.

Light field acquisition with camera arrays

A camera array is basically a macroscopic extension of the micro lens approach. The individual cameras are controlled via an Ethernet switch, for example, and the resulting data is also retrieved via an Ethernet interface to achieve high processing speeds.

To enable the image data from the various cameras to be converted into a data product, the cameras are arranged in a known regular pattern. Only in this way can the algorithm behind it calculate the shifts correctly.
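
Why the known, regular arrangement matters can be illustrated with the standard parallax relation for parallel pinhole cameras. The sketch assumes identical, rectified cameras on a grid with constant spacing; the function and parameter names are hypothetical, and the numbers are made up.

```python
def expected_shift_px(focal_px, pitch_m, grid_offset, depth_m):
    """Image shift of a scene point between the reference camera and a
    camera `grid_offset` = (columns, rows) away on a regular grid.

    focal_px : focal length in pixels
    pitch_m  : spacing between neighbouring cameras in metres
    depth_m  : distance of the point from the camera plane in metres
    """
    d_cols, d_rows = grid_offset
    shift_per_step = focal_px * pitch_m / depth_m
    return (shift_per_step * d_cols, shift_per_step * d_rows)

def depth_from_shift(focal_px, baseline_m, shift_px):
    """Invert the relation: a measured shift gives the depth of the point."""
    return focal_px * baseline_m / shift_px

shift = expected_shift_px(focal_px=1000.0, pitch_m=0.05,
                          grid_offset=(2, 1), depth_m=2.0)
depth = depth_from_shift(focal_px=1000.0, baseline_m=0.10, shift_px=shift[0])
print(shift, depth)
```

If the grid spacing were unknown or irregular, the same measured shift could not be converted into a depth without first estimating each camera's position.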

With multiple cameras, a scene is observed from different positions. The cameras can also differ in their parameters:

- Focal length of the imaging optics

- Focus settings

- Exposure time

- Aperture

- Recording time

- Use of spectral and polarization filters

With the help of a camera array, the complete inspection of a scene (e.g. 3D survey), the determination of spectral properties (e.g. color and chromatic aberration) or the acquisition of dielectric properties (e.g. polarization) is possible.

Camera arrays are not suitable for the microscopic range due to their size. Instead, they show their advantages in larger applications: in industrial production, special camera arrays are used for detection in the range of a few centimeters (e.g. screws) to meters (e.g. for pallets).

Distributed camera arrays

Distributed camera arrays consist of several cameras, which are still modeled as single cameras. This means that the entire camera array cannot be described with common extrinsic parameters (= position of the camera in the world coordinate system). In addition, the spatial coverage areas often do not overlap. Application areas of these camera arrays are surveillance systems for different premises or industrial inspection, where only different object areas have to be covered.

Such systems can contain homogeneous sensors (e.g. surveillance systems) as well as heterogeneous sensors (e.g. inspection systems). Here, the recorded data complement each other. To avoid overlap, the number of cameras should be kept to the minimum that the task requires.

Compact camera arrays

The cameras of a compact camera array are modeled together and therefore have additional extrinsic parameters by which it is possible to describe the position of the entire camera array with respect to the scene. In this case, the spatial coverage areas usually overlap considerably.
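
The idea of shared extrinsic parameters can be sketched with 4 x 4 homogeneous transforms: each camera has a fixed pose inside the array, and one additional pose places the whole array in the world. The poses, the helper names and the three-camera layout below are assumptions for illustration only.

```python
import numpy as np

def make_pose(rotation, translation):
    """Build a 4 x 4 homogeneous transform from a 3 x 3 rotation and a translation."""
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation
    return pose

def rot_z(angle_rad):
    """Rotation about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Fixed pose of each camera inside the array (camera -> array frame):
# three cameras in a row, 10 cm apart.
array_from_cam = [make_pose(np.eye(3), [x, 0.0, 0.0]) for x in (-0.1, 0.0, 0.1)]

# One shared pose for the entire array (array -> world frame).
world_from_array = make_pose(rot_z(np.pi / 2), [1.0, 2.0, 0.5])

# Moving the array only changes world_from_array; the per-camera poses stay fixed.
world_from_cam = [world_from_array @ pose for pose in array_from_cam]
camera_centres = np.array([pose[:3, 3] for pose in world_from_cam])
print(camera_centres)
```

In a distributed array there is no such common `world_from_array`; each camera would carry its own independent world pose.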

Such a system usually contains homogeneous sensors. The acquired information can be complementary as well as distributed (only a joint evaluation of the images provides the desired information). Compact camera arrays are also capable of capturing uni- (variation of a single acquisition parameter) and multivariate (variation of multiple acquisition parameters) image series.

Compact camera arrays are becoming increasingly important for many applications because they offer comprehensive capabilities to fully capture the visually detectable information of a scene.

Calibration of Plenoptic Cameras

In practical applications, it is often not only optical detection that is important, but also precise measurement of the detected workpieces. Correctly calibrated, plenoptic cameras can also be used as measuring systems: the metric information required for this purpose comes directly from the light field data of the calibration and the general properties of the plenoptic function.

However, commercially available plenoptic cameras usually provide distance values in non-metric units. This is a hurdle for robotics applications, which need metric distance values. By separating the calibration of the traditional camera properties from that of the new plenoptic properties, it is now possible to use traditional camera calibration methods to simplify the plenoptic camera calibration process and increase accuracy. For this purpose, the pinhole camera model is used, as for a conventional camera. Accuracies in the sub-millimeter range can currently be achieved.
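
For reference, the pinhole camera model mentioned here maps a 3D point in the camera frame to a pixel, and a pixel with a known metric depth back to a 3D point. The sketch below ignores lens distortion, and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are made-up numbers, not calibration results from DLR.

```python
import numpy as np

def project(points_xyz, fx, fy, cx, cy):
    """Pinhole projection of 3D points (camera frame, metres) to pixels."""
    p = np.atleast_2d(np.asarray(points_xyz, dtype=float))
    u = fx * p[:, 0] / p[:, 2] + cx
    v = fy * p[:, 1] / p[:, 2] + cy
    return np.column_stack([u, v])

def back_project(pixels_uv, depth_m, fx, fy, cx, cy):
    """Inverse mapping: pixel plus metric depth gives a metric 3D point."""
    px = np.atleast_2d(np.asarray(pixels_uv, dtype=float))
    z = np.asarray(depth_m, dtype=float)
    x = (px[:, 0] - cx) * z / fx
    y = (px[:, 1] - cy) * z / fy
    return np.column_stack([x, y, np.broadcast_to(z, x.shape)])

intrinsics = dict(fx=1200.0, fy=1200.0, cx=640.0, cy=480.0)  # hypothetical values
point = np.array([[0.1, -0.05, 2.0]])
pixel = project(point, **intrinsics)
recovered = back_project(pixel, point[:, 2], **intrinsics)
```

The back-projection step is where metric depth matters: with depth in arbitrary units, the recovered 3D points would be scaled incorrectly.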

The calibration is performed in two steps, each of which uses its own type of input data: 2D images with an extended depth of field and 3D depth images.

Thus, the noise of the depth estimation no longer affects the estimation of traditional parameters, such as the focal length or the radial lens distortion. This results in further advantages:

- Application of different optimizations to the above input data. This makes it possible to reduce outliers for the particular data type in a more targeted way.

- Splitting the calibration in two makes it easier to devise novel and faster initialization models for all parameters.

- Mixing of models for lens and depth distortion as well as those for internal quantities (focal length f, distance b between MLA and sensor, distance h between MLA and objective lens) is avoided.
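
To illustrate how the internal quantities f, b and h can turn a non-metric depth value into a metric distance, here is a sketch based on a thin-lens model that appears in the literature on focused plenoptic cameras. Whether DLR's calibration uses exactly this form is not stated in the article. The definition of `virtual_depth` (distance of the main lens image from the MLA, in units of b), the sign convention and all numbers are assumptions.

```python
def metric_depth(virtual_depth, f, b, h):
    """Convert a non-metric virtual depth into a metric object distance.

    f : focal length of the objective lens (metres)
    b : distance between MLA and sensor (metres)
    h : distance between MLA and objective lens (metres)
    Assumed model: image distance = h + virtual_depth * b, then the
    thin-lens equation 1/f = 1/z + 1/image_distance is solved for z.
    """
    image_distance = h + virtual_depth * b
    return 1.0 / (1.0 / f - 1.0 / image_distance)

def virtual_depth_from_metric(z, f, b, h):
    """Inverse of metric_depth under the same assumed model."""
    image_distance = 1.0 / (1.0 / f - 1.0 / z)
    return (image_distance - h) / b

# Hypothetical values: 35 mm lens, MLA 0.5 mm in front of the sensor.
params = dict(f=0.035, b=0.0005, h=0.036)
z = metric_depth(3.0, **params)
v = virtual_depth_from_metric(z, **params)
```

Because `f`, `b` and `h` enter only this depth conversion, they can be estimated from the 3D depth images in a separate step, without disturbing the pinhole parameters estimated from the 2D images.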

Plenoptic cameras are therefore able to shift the plane of focus even in retrospect and to generate different data products from their images. To evaluate the data correctly, the calibration must be divided into two steps. This reduces the influence of noise, which in turn makes accurate depth estimation possible. Plenoptic imaging techniques are therefore disruptive technologies that open up new application areas and allow traditional imaging techniques to be developed further.

(Source: DLR, article updated on 2021/11/04)