Imagine sitting at your desk at FBI Headquarters and suddenly being tasked with the surveillance of several countries. Your supervisors are interested in the movement of certain vehicles and the construction of new secret bases in secluded areas.

More specifically, they want you to identify these vehicles in images taken by drones or satellites. Luckily, this task does not faze you. You have friends at HD Vision Systems, and they can help you with the power of Artificial Intelligence and oriented bounding boxes!

Basic Object Detection with Artificial Intelligence

With the rise of Artificial Intelligence, it has become possible to teach computers to extract information from pictures. One such task is the computer-based detection of objects displayed in an image.

For computers, this means that they search the image for structures they have already seen and can associate with known objects. Based on this analysis, they predict which object is displayed in the image.

In this example, we can see a cat. A computer can see a cat, too. Cats are cool.

However, pure object detection was at first an academic accomplishment. In real-world applications, one rarely faces an image that contains exactly one unknown object whose type is to be determined. The reality is often different.

So, let’s go one step further and assume we have multiple different objects in an image and want to know the type of each of them. To be able to use this information down the line, we also want to know where each object is located in the image. This could be useful, for example, for sending each location to a robot for further handling.

Bounding Boxes

A straightforward way to handle multiple objects in an image is to have an algorithm take a look at the image and return precise information on:

- what objects the image contains,

- where the objects are located and

- what dimensions these objects have.

In other words, the algorithm puts a box around each object, a so-called bounding box, to mark the results. Usually, this comes together with the type of object or, as it is called in Machine Learning, a label.
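To make the three pieces of information concrete, here is a minimal sketch of how one detection could be stored. The class name `Detection`, its field names and the (x, y, width, height) layout are assumptions chosen for illustration; real detectors use a variety of conventions, and the numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object: a label plus an axis-aligned bounding box.

    The field names and layout are illustrative assumptions,
    not the output format of any particular detector.
    """
    label: str      # what the object is
    x: float        # left edge of the box, in pixels
    y: float        # top edge of the box, in pixels
    width: float    # horizontal extent, in pixels
    height: float   # vertical extent, in pixels

    def center(self) -> tuple[float, float]:
        """Return the center point of the box."""
        return (self.x + self.width / 2, self.y + self.height / 2)

    def area(self) -> float:
        """Return the area covered by the box, in square pixels."""
        return self.width * self.height


# Hypothetical detector output for one image containing two objects.
detections = [
    Detection("cat", x=40, y=60, width=200, height=120),
    Detection("car", x=300, y=80, width=90, height=45),
]

for d in detections:
    print(d.label, d.center(), d.area())
```

Each entry answers the three questions from the list above: the label says what the object is, `x` and `y` say where it is, and `width` and `height` give its dimensions.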

Again, computers start this task by analyzing the structures in the image and referencing them to known objects. In this case, however, this is not done for the image as a whole; instead, various parts of the image are considered separately. Afterwards, the objects found are not only identified, but their exact location and dimensions are predicted as well.

We are still seeing a cat. We already knew where the cat is in the image. Our computer just caught up with us and now knows its location as well.

The FBI is amazed. You have identified the respective vehicles.

But you did too well. While you thought you had given them more data than they could possibly use, they now also want to know the direction in which the vehicles are moving.

Can this be done?

Directed and Oriented Bounding Boxes

At this point, it is possible to tell what is where in an image.

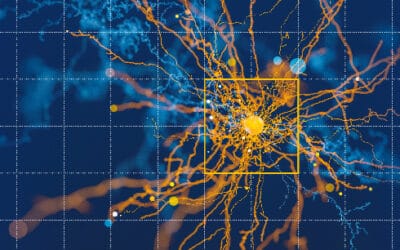

Take, for example, an aerial photo of a parking lot. In this lot, the vehicles are parked in different orientations, none of which align with the orientation of the image. This means that while we have a good understanding of where the vehicles are parked, the dimensions we have so far are those of the boxes, not exactly those of the vehicles.

To refine the result, we detect not only the bounding boxes but their orientation as well. This allows the bounding boxes to be rotated so that they align with the orientation of the detected object, enclosing only the object and as little surrounding space as possible. As a consequence, we are detecting oriented, or minimal, bounding boxes.
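How much space an upright box wastes around a rotated object can be shown with a short calculation. The sketch below assumes an oriented box is described by its center, width, height and a rotation angle in degrees; the function names `obb_corners` and `enclosing_aabb` are hypothetical helpers for this illustration, not part of any specific library.

```python
import math


def obb_corners(cx, cy, width, height, angle_deg):
    """Return the four corners of a box centered at (cx, cy), rotated by angle_deg."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    half_w, half_h = width / 2, height / 2
    offsets = [(-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)]
    # Rotate each corner offset around the center, then shift it to the center.
    return [
        (cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a)
        for dx, dy in offsets
    ]


def enclosing_aabb(corners):
    """Return (x_min, y_min, width, height) of the upright box around the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


# A hypothetical car, 4 units long and 2 units wide, parked at 45 degrees.
corners = obb_corners(cx=10, cy=10, width=4, height=2, angle_deg=45)
_, _, aabb_w, aabb_h = enclosing_aabb(corners)

oriented_area = 4 * 2
axis_aligned_area = aabb_w * aabb_h
print(f"oriented box area:     {oriented_area}")
print(f"axis-aligned box area: {axis_aligned_area:.1f}")
```

For this example, the upright box covers more than twice the area of the oriented one, so its width and height say little about the actual size of the car.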

The cat is going crazy. But it does not matter. Humans love cats. Neither does it matter to our computer. It accepts the cat’s craziness and can still tell you reliably where your cat is and which way your cat is looking.

For the last step, the vehicles have left the parking lot and are now driving on a country road. To someone standing at the side of the road, it would be clear in which direction they are driving. But no one is on-site.

However, a car also has a well-defined front and back and this usually determines the direction the car is driving in. Especially on a country road.

Indeed, this last bit of information can also be extracted with seemingly simple alterations to the algorithm. One only has to add another prediction to the ones already made (location, dimension, label). This additional prediction identifies either only the orientation of the object or its direction.

The algorithm can now tell the direction of objects in a picture, provided the object can have an orientation at all.
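The difference between orientation and direction can be sketched in a few lines. The sketch assumes the additional prediction is a single angle in degrees: a direction covers the full circle, while an orientation ignores which end is the front and therefore repeats every 180 degrees. The function names and the angle convention (measured from the x-axis) are assumptions made for illustration.

```python
import math


def heading_vector(direction_deg):
    """Return the unit vector pointing where the front of the object faces."""
    a = math.radians(direction_deg)
    return (math.cos(a), math.sin(a))


def orientation_of(direction_deg):
    """Drop the front/back information by folding a direction into [0, 180)."""
    return direction_deg % 180


# Two hypothetical cars on the same road, driving in opposite directions.
car_a, car_b = 30, 210

# Both cars are aligned with the same road axis ...
print(orientation_of(car_a) == orientation_of(car_b))

# ... but their heading vectors point in opposite directions.
print(heading_vector(car_a), heading_vector(car_b))
```

An orientation alone is enough to fit the box tightly around a vehicle; only a direction tells which way it is driving.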

In summary, with oriented bounding boxes we can tell what is visible in an image, where these objects are located, how they are oriented and which side of the box the front of the object is facing.

You have successfully delivered. Your supervisors are happy.

You may come back tomorrow to face new challenges at the FBI.