When we learn a new skill, our brain strengthens existing synaptic connections between neurons and forms new ones. The more synapses form during learning, and the more they are strengthened through repeated recall, the better we learn. Artificial neural networks try to imitate this complex process. But do artificial neural networks also improve through repeated recall of the same data?

Artificial neural networks

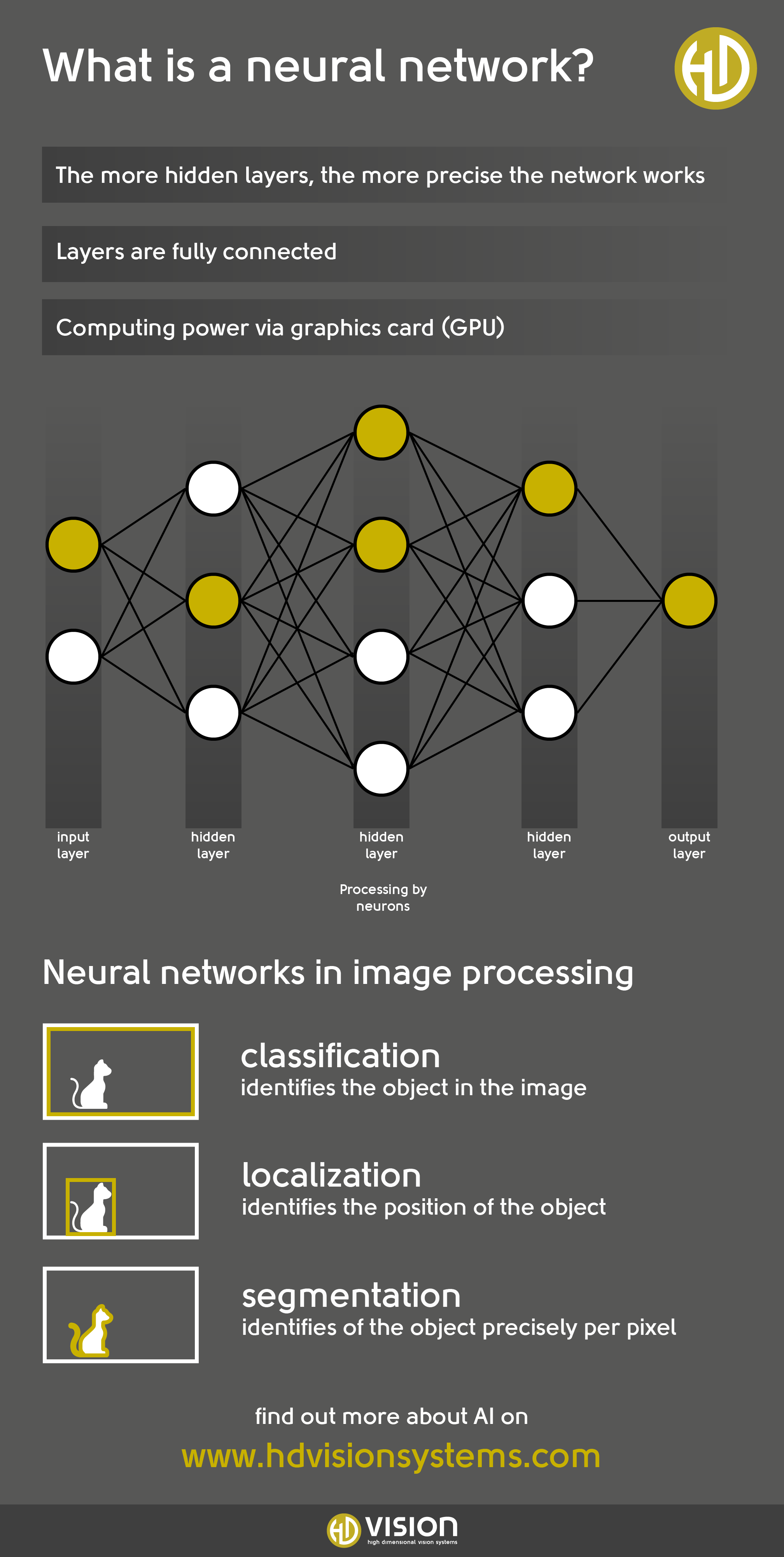

Artificial neural networks imitate the structure and information processing of the human brain. Unlike their biological model, however, they work with numbers instead of neurotransmitters: an artificial neural network is a mathematical construct.
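
For reference, here is the standard formulation of a single artificial neuron. It multiplies each input $x_i$ by a weight $w_i$, adds a bias $b$ and passes the sum through an activation function $\varphi$. The sigmoid shown on the right is only one common choice of $\varphi$, and the notation is the usual textbook one rather than anything defined in this article:

$$
y = \varphi\left(\sum_{i=1}^{n} w_i x_i + b\right), \qquad \varphi(z) = \frac{1}{1 + e^{-z}}
$$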

It consists of an input layer, an output layer and a varying number of hidden layers in between. The more complex the task, the more parameters, and therefore layers, are needed to process it. A multi-layered network can thus learn to solve complex mathematical problems without being explicitly programmed for them. Training such particularly multi-layered networks is therefore referred to as “deep learning”.
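
The layered structure can be illustrated with a minimal forward pass in plain Python. This is a sketch under assumptions: a fully connected network with sigmoid activations, arbitrary illustrative weights, and hypothetical helper names (`dense_layer`, `forward`, `count_parameters`). The parameter count shows how each additional layer increases the number of weights that training has to adjust.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron forms a weighted sum of all
    inputs, adds its bias and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input vector through each (weights, biases) layer in turn:
    the hidden layers first, the output layer last."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network, given the number
    of neurons per layer (input layer first)."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                               # output layer
]
print(forward([1.0, 0.5], layers))     # a single value between 0 and 1
print(count_parameters([2, 3, 1]))     # 13
print(count_parameters([2, 3, 3, 1]))  # 25: one more hidden layer
```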

As a result, neural networks are used in speech analysis and generation, image processing and pattern recognition.

The learning process of neural networks

During learning, artificial neural networks analyze patterns in data and derive problem-solving models from them. These models are developed using training data, and how well they succeed depends on the quality and quantity of that data.

Training begins with data being fed into the input layer. The neurons of the hidden layers then weight and process the data, and the output layer computes the final result. This result is compared with the expected one, and the error is used to adjust the weights, so that the error of the calculation decreases over successive training runs.
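
The training loop just described can be sketched for the simplest possible case: a single linear neuron fitted by gradient descent. The function name `train_linear_neuron`, the mean squared error as the error measure and the toy data are assumptions for illustration; real networks apply the same idea to every weight via backpropagation.

```python
def train_linear_neuron(data, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    Each epoch is one training run: compute the outputs, measure the error
    against the expected values and adjust w and b against the gradient.
    Returns the final parameters and the error recorded after every epoch.
    """
    w, b = 0.0, 0.0
    n = len(data)
    history = []
    for _ in range(epochs):
        # Forward pass: error of the current output for every example.
        errors = [(w * x + b) - y for x, y in data]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, (x, _) in zip(errors, data))
        grad_b = 2.0 / n * sum(errors)
        # Weight update, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(sum(((w * x + b) - y) ** 2 for x, y in data) / n)
    return w, b, history

# Toy data following the rule y = 2x + 1.
w, b, history = train_linear_neuron([(x, 2 * x + 1) for x in range(5)])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```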

The learning rate determines how strongly the network adjusts its weights in response to the detected errors after each run. It therefore also influences how long training takes: a rate that is too small makes progress slow, while a rate that is too large can overshoot good weights.
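
A toy example makes the role of the learning rate concrete. Here gradient descent minimizes the hypothetical error function `f(w) = w**2`, whose gradient is `2 * w`, so each step multiplies `w` by `(1 - 2 * learning_rate)`. Real error surfaces are far more complex; `descend` is only an illustration.

```python
def descend(learning_rate, steps=10, w=1.0):
    """Minimize f(w) = w**2 (gradient 2 * w) with plain gradient descent."""
    for _ in range(steps):
        w -= learning_rate * 2 * w  # step against the gradient
    return w

for lr in (0.01, 0.1, 1.1):
    print(lr, descend(lr))
# 0.01 -> about 0.817 (slow progress towards the minimum at 0)
# 0.1  -> about 0.107 (fast progress)
# 1.1  -> about 6.19  (overshoots on every step and diverges)
```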

Overfitting

The human brain consolidates information through continuous repetition. Neural networks, too, can reach up to 100% accuracy on their training data if they are trained on it repeatedly. However, their results on other data are then likely to deteriorate.

This is because, after a while, the system merely reproduces the solutions it has derived from the training data. The algorithm then handles the training data correctly but fails to produce correct results for new data. Experts refer to this memorization of the training data as overfitting. An unsuitable learning rate can also contribute to overfitting.
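
Memorization can be illustrated without a neural network at all. In the sketch below, a hypothetical "memorizer" stores every training pair and answers new queries with the label of the nearest stored input, standing in for an overfitted model; a least-squares straight line stands in for a model that generalizes. The data (the rule y = 2x plus alternating noise of ±1) is made up for the example.

```python
def fit_memorizer(train):
    """Store every training pair verbatim; answer a query with the label
    of the closest stored input (pure memorization)."""
    table = dict(train)
    def predict(x):
        nearest = min(table, key=lambda k: abs(k - x))
        return table[nearest]
    return predict

def fit_line(train):
    """Least-squares line y = a * x + b: a simple model that cannot
    reproduce every noisy training point exactly."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def mse(model, data):
    """Mean squared error of a model on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)

# Training data: the rule y = 2x plus alternating noise of +1 / -1.
train = [(x, 2 * x + (1 if x % 2 == 0 else -1)) for x in range(10)]
# New inputs the models have never seen, with noise-free targets.
test = [(k + 0.25, 2 * (k + 0.25)) for k in range(9)]

memorizer, line = fit_memorizer(train), fit_line(train)
print("memorizer train/test:", mse(memorizer, train), mse(memorizer, test))  # 0.0 and about 1.14
print("line      train/test:", mse(line, train), mse(line, test))            # test error far lower
```

The memorizer is perfect on the training data but clearly worse than the simpler line on new inputs, which is the pattern described above.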

The more layers a system has, the longer training takes and the greater the risk of overfitting. Poorly selected training or test data, or too little data, also leads to incorrect weighting.

Correct use of test data

Splitting off a test data set and a blind test data set in addition to the training data set prevents this. Overfitting can then be recognized by the fact that the accuracy on the training data increasingly exceeds the accuracy on the test data, which stops improving or even declines. At this point, training is stopped.
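
Creating the three data sets can be sketched as a single shuffle followed by slicing, so that no sample ends up in more than one set. The 70/15/15 proportions, the fixed seed and the name `split_dataset` are assumptions chosen for illustration, not values prescribed by the text.

```python
import random

def split_dataset(samples, test_fraction=0.15, blind_fraction=0.15, seed=42):
    """Shuffle once, then cut into disjoint training, test and blind test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed: reproducible split
    n = len(shuffled)
    n_test = round(n * test_fraction)
    n_blind = round(n * blind_fraction)
    blind = shuffled[:n_blind]                   # untouched until the final check
    test = shuffled[n_blind:n_blind + n_test]    # monitored during training
    train = shuffled[n_blind + n_test:]          # used to adjust the weights
    return train, test, blind

train, test, blind = split_dataset(range(100))
print(len(train), len(test), len(blind))  # 70 15 15
```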

The blind test data is used for a final check of the system's functionality; it plays no part in training or in deciding when to stop. If the algorithm produces correct results on this data, the system is considered valid. For iteratively trained models, stopping training early in this way (“early stopping”) also prevents overfitting.
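
The stopping rule can be sketched as a common patience-based variant of early stopping: training continues while the test accuracy keeps improving and ends once it has not improved for a set number of epochs, keeping the weights from the best epoch. The function `early_stopping_epoch`, the patience of 3 and the accuracy values are hypothetical; in practice the check runs inside the training loop after each epoch.

```python
def early_stopping_epoch(test_accuracies, patience=3):
    """Return (best_epoch, stop_epoch) given the test-set accuracy after each
    epoch. Training stops once the accuracy has not improved for `patience`
    consecutive epochs; the weights from best_epoch are the ones kept."""
    best_epoch, best_acc = 0, float("-inf")
    for epoch, acc in enumerate(test_accuracies):
        if acc > best_acc:
            best_epoch, best_acc = epoch, acc    # new best: remember it
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch             # no progress for too long
    return best_epoch, len(test_accuracies) - 1  # ran out of epochs

# Hypothetical run: test accuracy peaks at epoch 4, then declines.
test_acc = [0.60, 0.70, 0.76, 0.80, 0.82, 0.81, 0.80, 0.79, 0.78, 0.77]
print(early_stopping_epoch(test_acc))  # (4, 7)
```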

Dropout layer

A dropout layer also helps to prevent the model from over-specializing. During training, the system randomly switches off neurons of a layer, so a different combination of neurons is trained in each run, which prevents the network from memorizing the training data. Dropout is therefore a regularization method. It does not speed up training, however: networks with dropout usually need more training runs to converge.
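
The mechanism can be sketched as so-called inverted dropout, the variant used by common deep learning frameworks: each activation is zeroed with probability `rate` during training and the surviving ones are scaled by 1 / (1 - rate), so no rescaling is needed at inference time. The function `dropout` and its signature are hypothetical.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout for one layer's activations (0 <= rate < 1).

    Training: zero each activation with probability `rate` and scale the
    survivors by 1 / (1 - rate), keeping each activation's expected value.
    Inference: return the activations unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]

rng = random.Random(0)
layer = [1.0] * 8
print(dropout(layer, 0.5, rng))  # each entry is 0.0 or 2.0
print(dropout(layer, 0.5, rng))  # a different random mask on the next run
```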

Correct selection and processing of the test data

To prevent bias in the models, it is important to work out in advance, with expert knowledge, which correlations in the data are relevant. This helps to avoid bias caused by inappropriate or incorrect data or by too little data. Incorrectly labeled data also causes errors. In addition, a learning rate that is too high leads to suboptimal weights.
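
Some of these data problems can be caught with simple checks before training. The sketch below, using a hypothetical `audit_labels` helper and made-up samples, flags identical inputs that carry different labels (a hint at labeling errors) and classes with too few examples. It cannot detect subtler issues such as systematically biased sampling, which still requires domain knowledge.

```python
from collections import Counter, defaultdict

def audit_labels(samples, min_per_class=2):
    """Pre-training checks on (features, label) pairs. Returns:
    - conflicts: identical feature tuples that carry different labels
    - rare: classes with fewer than `min_per_class` examples
    """
    labels_by_input = defaultdict(set)
    for features, label in samples:
        labels_by_input[tuple(features)].add(label)
    conflicts = {x: sorted(ls) for x, ls in labels_by_input.items() if len(ls) > 1}
    counts = Counter(label for _, label in samples)
    rare = sorted(c for c, n in counts.items() if n < min_per_class)
    return conflicts, rare

# Hypothetical samples: (feature_1, feature_2) -> label
data = [
    ((0.9, 0.1), "ok"), ((0.9, 0.1), "defect"),  # same input, two labels
    ((0.2, 0.8), "defect"), ((0.8, 0.2), "ok"),
    ((0.5, 0.5), "scratch"),                     # only one example
]
print(audit_labels(data))  # ({(0.9, 0.1): ['defect', 'ok']}, ['scratch'])
```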

When choosing the learning rate, it is therefore important to compare the results of different rates on suitable benchmarks for the data. The learning rate can also be reduced gradually during training. Collecting a sufficiently large, valid sample and handling data and parameters correctly thus also helps to prevent overfitting.
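
Gradually reducing the learning rate is usually done with a schedule. A minimal sketch of one common choice, step decay, follows; the halving factor, the interval of 10 epochs and the name `step_decay` are illustrative assumptions.

```python
def step_decay(initial_rate, epoch, drop=0.5, epochs_per_drop=10):
    """Step-decay schedule: multiply the learning rate by `drop`
    after every `epochs_per_drop` completed epochs."""
    return initial_rate * drop ** (epoch // epochs_per_drop)

for epoch in (0, 9, 10, 25, 40):
    print(epoch, step_decay(0.1, epoch))
# 0 -> 0.1, 9 -> 0.1, 10 -> 0.05, 25 -> 0.025, 40 -> 0.00625
```

Large steps early on make fast progress, while smaller steps later allow the weights to settle instead of overshooting.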

Worse results due to memorization

Artificial neural networks are modeled on the learning processes of the human cognitive system, but so far they cannot match the brain's ability to generalize from what it has learned. Instead, neural networks tend to memorize their training set, and as a result they sometimes perform worse on new data after repeated training.